In the ever-evolving landscape of artificial intelligence, large language models (LLMs) have emerged as the architects of a new era in machine learning. These models, with their vast neural networks and billions of parameters, have demonstrated an uncanny ability to generate human-like text, answer complex questions, and even engage in creative storytelling. But what if we could teach these models not just to predict the next word, but to understand and follow instructions with precision? Enter instruction pretraining, an approach that aims to bridge the gap between raw language generation and task-specific execution. By training LLMs on a diverse array of instructional datasets, researchers are unlocking the potential for models to perform tasks with greater accuracy, adaptability, and nuance. This article delves into instruction pretraining, exploring its methodologies, challenges, and the impact it could have on the future of AI.

The Foundations of Instruction Pretraining for Large Language Models

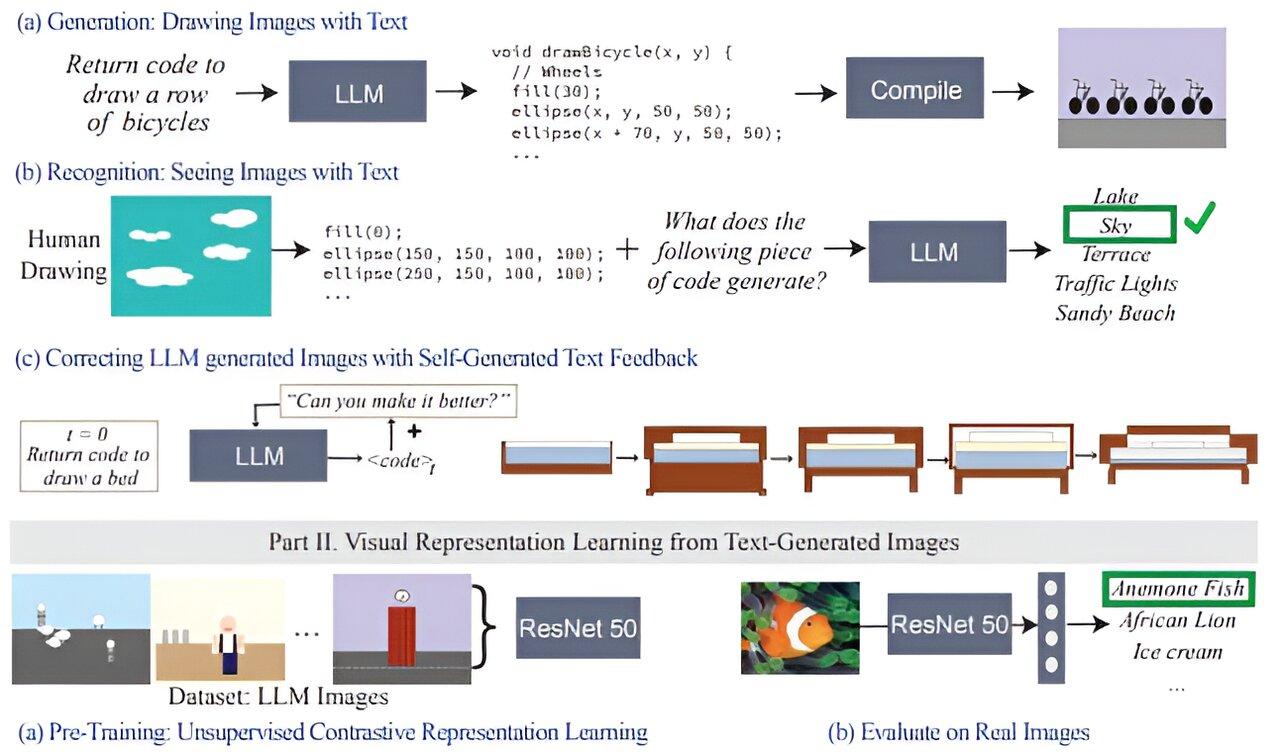

Instruction pretraining has emerged as a transformative approach to enhancing the capabilities of large language models (LLMs). By leveraging instruction-response pairs, this method enables models to learn from structured, task-specific data, bridging the gap between raw pretraining and fine-tuning. The framework, as proposed in recent research, involves augmenting massive raw corpora with synthesized instruction-response pairs, which are generated using efficient, open-source models. This approach not only improves the model’s ability to generalize across tasks but also ensures scalability, making it feasible to apply to datasets as large as 15 trillion tokens [[1]].
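As a rough illustration of the augmentation step described above, the sketch below appends synthesized instruction-response pairs to a raw document to form a single pretraining example. The names `InstructionPair`, `augment_document`, and `toy_synthesizer`, along with the `Instruction:`/`Response:` template, are assumptions made for this example and are not taken from the cited research; a real pipeline would call an actual synthesizer model in place of the toy function.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class InstructionPair:
    """One synthesized instruction and its response (hypothetical container)."""
    instruction: str
    response: str


def augment_document(raw_text: str, pairs: List[InstructionPair]) -> str:
    """Join a raw document with its instruction-response pairs.

    The plain-text template used here is an assumption for illustration only.
    """
    parts = [raw_text.strip()]
    for pair in pairs:
        parts.append(
            f"Instruction: {pair.instruction.strip()}\n"
            f"Response: {pair.response.strip()}"
        )
    return "\n\n".join(parts)


def toy_synthesizer(raw_text: str) -> List[InstructionPair]:
    """Stand-in for a synthesizer model: echoes the first sentence as a summary."""
    first_sentence = raw_text.strip().split(".")[0].strip() + "."
    return [InstructionPair("Summarize the passage in one sentence.", first_sentence)]


if __name__ == "__main__":
    doc = "Photosynthesis converts light into chemical energy. It occurs in chloroplasts."
    print(augment_document(doc, toy_synthesizer(doc)))
```

The augmented string can then be tokenized and fed to the usual next-token objective, so the model sees both the raw text and tasks grounded in it.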

Key elements of instruction pretraining include:

- Supervised Multitask Learning: Models are trained on diverse tasks together, enhancing their adaptability and performance across domains.

- Efficient Data Synthesis: Instruction-response pairs are generated using lightweight, open-source synthesizers, ensuring cost-effectiveness and scalability [[2]].

- Publicly Available Data: Pretraining relies on publicly accessible datasets, maintaining transparency and reducing dependency on proprietary resources.

| Component | Benefit |
|---|---|
| Instruction-Response Pairs | Enhances task-specific understanding |
| Open-Source Synthesizers | Reduces computational costs |
| Multitask Pretraining | Improves generalization |

Unlocking the Potential of Task-Specific Data in Pretraining

Task-specific data has emerged as a game-changer in the pretraining of large language models (LLMs). By incorporating domain-specific instructions during the pretraining phase, models can develop a stronger foundation for understanding and executing complex tasks. This approach not only enhances the model’s ability to generalize across diverse scenarios but also reduces the need for extensive labeled datasets later. For instance, instruction pretraining allows LLMs to internalize patterns and structures that are crucial for tasks like summarization, translation, and question-answering, making them more adaptable and efficient [[1]].

One of the key advantages of this method is its cost-effectiveness. Instead of relying solely on massive datasets, researchers can generate synthetic instruction data tailored to specific tasks. This not only accelerates the training process but also ensures that the model aligns closely with real-world applications. Below is a simple breakdown of how task-specific data impacts pretraining:

- Improved Alignment: Models trained with task-specific instructions exhibit better alignment with user expectations.

- Reduced Overhead: Less reliance on labeled data lowers computational and financial costs.

- Enhanced Adaptability: Pretraining with diverse instructions prepares models for a wide range of downstream tasks.

| Benefit | Impact |
|---|---|
| Alignment | Better task-specific performance |
| Cost Efficiency | Lower resource requirements |
| Adaptability | Broader application scope |

By leveraging task-specific data, instruction pretraining bridges the gap between general-purpose LLMs and specialized models, unlocking new possibilities for AI-driven solutions [[3]].

Strategies for Balancing Generalization and Specialization in LLMs

Balancing generalization and specialization in large language models (LLMs) is a nuanced challenge that requires strategic approaches. One effective method is two-stage fine-tuning, where the model is first fine-tuned on a broad dataset to retain its general problem-solving capabilities, followed by targeted fine-tuning on specialized tasks. This approach mitigates the risk of over-specialization, ensuring the model remains adaptable across diverse domains [[3]]. Additionally, leveraging contextual partitioning during training can help LLMs dynamically adapt to different linguistic contexts without extensive computational overhead. By segmenting tasks into contextually relevant partitions, the model can maintain a balance between broad applicability and task-specific precision [[2]].
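To make the two-stage idea concrete, here is a minimal sketch of a data schedule that draws from a broad dataset first and a specialized one second. The function name `two_stage_schedule` and all of its parameters are hypothetical. The optional `replay_fraction`, which mixes a share of broad examples back into the second stage, is an assumption added for this example and is not described in the cited work; it defaults to zero so the default behaviour matches the plain two-stage description.

```python
import random
from typing import Iterator, Sequence, Tuple


def two_stage_schedule(
    broad: Sequence[str],
    specialized: Sequence[str],
    broad_steps: int,
    specialized_steps: int,
    replay_fraction: float = 0.0,
    seed: int = 0,
) -> Iterator[Tuple[str, str]]:
    """Yield (stage, example) pairs: broad data first, specialized data second.

    replay_fraction is an illustrative assumption: the probability that a
    stage-2 step samples a broad example instead of a specialized one.
    """
    rng = random.Random(seed)
    # Stage 1: broad data only, to keep general capabilities.
    for _ in range(broad_steps):
        yield "stage1", rng.choice(broad)
    # Stage 2: specialized data, optionally mixed with replayed broad data.
    for _ in range(specialized_steps):
        if rng.random() < replay_fraction:
            yield "stage2", rng.choice(broad)
        else:
            yield "stage2", rng.choice(specialized)


if __name__ == "__main__":
    general = ["general QA example", "general summarization example"]
    domain = ["legal clause extraction example", "contract QA example"]
    for stage, example in two_stage_schedule(general, domain, 2, 3):
        print(stage, "->", example)
```

In practice each yielded example would be passed to a training step; the schedule only decides which pool the next example comes from.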

Another strategy involves task-relevant unit analysis, which identifies specific units within the model responsible for particular cognitive tasks. This neuroscientific lens allows for targeted adjustments, enhancing specialization without compromising the model’s overall generalization capabilities [[1]]. Below is a simple table summarizing key strategies:

| Strategy | Benefit |
|---|---|
| Two-stage fine-tuning | Preserves generalization while enabling task-specific adaptation |
| Contextual partitioning | Reduces computational costs while maintaining adaptability |
| Task-relevant unit analysis | Enhances precision without over-specialization |

By integrating these strategies, developers can create LLMs that excel in both broad and niche applications, ensuring they remain versatile yet precise in their problem-solving capabilities.

Practical Recommendations for Optimizing Instruction Pretraining Workflows

To optimize instruction pretraining workflows, it’s essential to focus on data quality and scalability. Start by leveraging open-source models to generate high-quality instruction-response pairs, ensuring they cover a diverse range of tasks. For instance, synthesizing 200M pairs across 40+ task categories has proven effective in enhancing model performance [[3]]. Additionally, prioritize efficient data augmentation techniques that enrich raw corpora without straining computational resources. This approach not only improves the model’s generalization but also reduces the need for extensive fine-tuning later.
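One simple way to check the task-diversity recommendation above is to count synthesized pairs per task category before training. The sketch below is only an illustration: the `(category, instruction, response)` tuple layout, the function name `coverage_report`, and the `min_share` threshold are assumptions for this example, while the default of 40 categories mirrors the figure quoted in the text.

```python
from collections import Counter
from typing import Dict, Iterable, Tuple

# Each record is (task_category, instruction, response); this layout is assumed.
Record = Tuple[str, str, str]


def coverage_report(
    records: Iterable[Record],
    min_categories: int = 40,
    min_share: float = 0.001,
) -> Dict[str, object]:
    """Summarize how instruction-response pairs are spread across task categories.

    min_share is an illustrative threshold: categories holding a smaller
    fraction of all pairs are reported as underrepresented.
    """
    counts = Counter(category for category, _, _ in records)
    total = sum(counts.values())
    underrepresented = sorted(
        category for category, n in counts.items() if total and n / total < min_share
    )
    return {
        "total_pairs": total,
        "num_categories": len(counts),
        "meets_category_target": len(counts) >= min_categories,
        "underrepresented": underrepresented,
    }


if __name__ == "__main__":
    sample = [
        ("summarization", "Summarize the text.", "..."),
        ("summarization", "Give a one-line summary.", "..."),
        ("translation", "Translate to French.", "..."),
    ]
    print(coverage_report(sample, min_categories=2, min_share=0.4))
```

A report like this makes it easy to spot categories that need more synthesized pairs before committing compute to a pretraining run.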

Another critical aspect is model architecture and training strategies. Consider using multitask learning frameworks that integrate supervised and unsupervised methods, as this combination has shown promising results in achieving competitive performance even with smaller models [[2]]. Below is a simple table summarizing key workflow optimizations:

| Focus Area | Advice |
|---|---|
| Data Generation | Use open-source models for scalable instruction synthesis. |
| Task Diversity | Cover 40+ task categories for robust pretraining. |
| Training Strategy | Combine supervised and unsupervised multitask learning. |
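One possible reading of the training-strategy advice in the table is to mix instruction-augmented text (supervised) with plain raw text (unsupervised) inside each training batch. The sketch below assumes that interpretation; `mixed_batch` and its `instruction_ratio` parameter are hypothetical names, and the source does not specify a mixing ratio.

```python
import random
from typing import List, Sequence, Tuple


def mixed_batch(
    raw_texts: Sequence[str],
    instruction_texts: Sequence[str],
    batch_size: int,
    instruction_ratio: float = 0.5,
    seed: int = 0,
) -> List[Tuple[str, str]]:
    """Build one batch mixing instruction-augmented and raw examples.

    instruction_ratio is an assumed knob: the share of the batch drawn
    from instruction-augmented text.
    """
    if not 0.0 <= instruction_ratio <= 1.0:
        raise ValueError("instruction_ratio must be between 0 and 1")
    rng = random.Random(seed)
    n_instruction = round(batch_size * instruction_ratio)
    batch = [("supervised", rng.choice(instruction_texts)) for _ in range(n_instruction)]
    batch += [
        ("unsupervised", rng.choice(raw_texts))
        for _ in range(batch_size - n_instruction)
    ]
    rng.shuffle(batch)  # avoid a fixed ordering of the two sources within the batch
    return batch


if __name__ == "__main__":
    raw = ["raw web paragraph A", "raw web paragraph B"]
    augmented = ["paragraph A + instruction pairs", "paragraph B + instruction pairs"]
    for kind, text in mixed_batch(raw, augmented, batch_size=4, instruction_ratio=0.25):
        print(kind, "->", text)
```

Both kinds of example can share the same next-token loss, so the ratio mainly controls how much task-formatted text the model sees per step.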

By implementing these strategies, you can streamline your pretraining workflows and achieve better results with fewer resources.

In Summary

As we stand on the precipice of a new era in artificial intelligence, the journey of instruction pretraining for large language models (LLMs) unfolds like a vast, uncharted landscape.

Each step forward reveals both the immense potential and the intricate challenges of teaching machines to understand and generate human-like text. While the road ahead is still shrouded in questions about ethics, scalability, and the true nature of machine understanding, one thing is clear: the seeds of innovation planted today will shape the forests of tomorrow.

Whether these models become tools of empowerment, creativity, or even companionship, their evolution is a testament to humanity’s relentless pursuit of knowledge. The story of instruction pretraining is far from over; it is a narrative still being written, one prompt at a time.